Rapidex English Speaking Course Book PDF in Hindi Free Download

Rapidex English Speaking Course PDF Download - This is the revised edition of the Rapidex Spoken English book, with many new updates. The latest 2025 edition of the Rapidex English Speaking Course PDF adds new topics that help students learn spoken English easily. The Google Drive link to the Rapidex English Speaking Course Book PDF is provided to meet students' demand for learning to speak English quickly. The Hindi edition of the Rapidex English Speaking Course PDF is written for native Hindi speakers who want to learn spoken English through Hindi.

Rapidex English Speaking Course Language

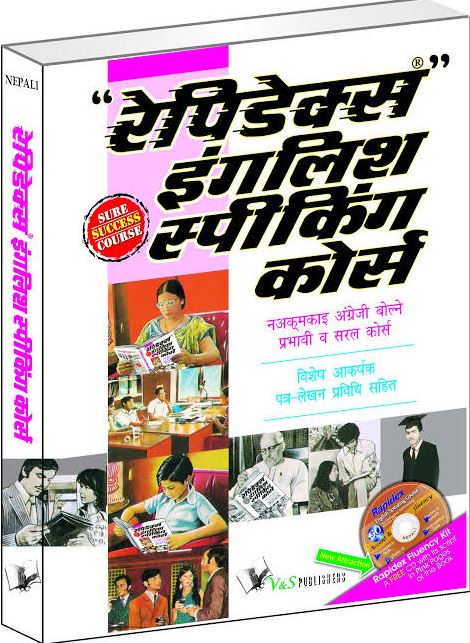

This book, which teaches pure, fluent English at the convent-school level, is liked by people in every corner of India and in every section of society. It is available in 14 languages. Download the Rapidex English Speaking Course PDF in Hindi, Malayalam, Tamil, Telugu, Kannada, Gujarati, Marathi, Bengali, Punjabi, Oriya, Urdu, Assamese, Nepali and Arabic from the world-famous free Spoken English Classroom Tuition.

Free Along With Rapidex English Speaking Course

- Rapidex English Spoken Course Book PDF 2025-25

- Free Rapidex Spoken English Classroom Course 2025-25

- Rapidex English Speaking Course Official Website

- Free Rapidex Word Building Course 2025-25

About Rapidex English Speaking Course 2025 "Book"

I have also uploaded the new and updated version of the Rapidex English Speaking Course. If you want to read this book, you can download it from our website and read the 60-day English Speaking Course book. The book was photographed and converted to PDF so that it is available to everyone. You can read it as part of this new course.

The Rapidex English Speaking Course is also a must-read for people working in customer service. Customer support staff have to talk to many different people and solve their problems. Many customers know only their mother tongue and cannot get proper help in it; in such cases support staff have to help them in English, because English is a global language that almost everyone can understand. That is why this is a useful book for customer support staff, and why the English Spoken Book PDF should be downloaded and read for free.

How to start reading the book Rapidex English Speaking Course?

The Rapidex English Speaking Course is not an ordinary course: it is the distillation of extensive research and years of experience of expert English language teachers. Much has been added to the book in response to readers' reactions and suggestions, as you will realize by reading and practicing according to the instructions given. In preparing this 60-day course we kept two goals in view: first, that you start speaking English fluently; and second, that you gradually gain knowledge of the nature of the language and of writing, syntax, spelling, punctuation and so on.

Thus, this course will teach you both speaking and writing in English. The course covers a journey of 60 days, in which every day is one lesson. The 60 days are divided into 6 units, and the last day of each unit (the tenth, the twentieth, the thirtieth and so on) is a practice day. On these days you will also get new additional information, and with the help of exercises, tests and tables you will be able to check for yourself how much you have learned.

How do you get confidence in speaking English?

Although all the sentences in this course are important and useful, those used most frequently in day-to-day life, along with some other popular sentences, are printed in bold type. Because they are short, these sentences can be memorized quickly and easily. You can use them in ordinary conversation and make an impression on people. A good knowledge of a language does not necessarily mean you can speak it with full confidence. As long as there is fear in your mind about what people will say, you will hold back in conversation. Similarly, if your pronunciation is not correct, you will be reluctant to speak for fear of being laughed at, or you will not be able to say the right thing at the right time. To protect you from these problems, the 16-page script of the audio cassette provided with the book is given at the end of the book. Read the instructions given at the beginning and practice correct pronunciation with the cassette. After practicing for some time you will certainly feel confident, and speaking English will feel like speaking your mother tongue.

Download Rapidex English Speaking Course 2025 "Pdf"

The Rapidex English Speaking Course PDF has proved to be a useful book for businesspeople all over the world. One of the biggest reasons for its success is that it helps people learn good English. Every businessperson wants their spoken English, written English and English conversation to be very strong. For this they need a good book, and the Rapidex English Speaking Course Book PDF is helping businesspeople meet that need.

This book is necessary for every student, every professional and every founder, and for interviews, whether they want to start a company or develop good software or a good website. Today all companies, whether Google or Microsoft, need people who know good English and want to hire them. It is therefore necessary to learn good English, and the English speaking course is helping people do so.

Rapidex English Speaking Course 2025 "Book PDF Free Download"

Rapidex English Speaking Course 2025 "Book Preview"

Rapidex English Speaking Course 2025 "Download Link"

Watch Rapidex English Speaking Course 2024 "Video Lecture"

Diznr International provides you with the complete video lecture of the Rapidex English Speaking Course book. The video lecture is easy to understand and teaches English from the basics. Students should follow it and start learning spoken English. It will guide you step by step and boost your confidence to speak English.

Watch Rapidex English Vocabulary 2025 "Dictionary"

Learning English starts from your word stock, so boost your word stock using our Rapidex English Dictionary Word Meaning video lecture. When you have a good word stock, you will feel confident speaking English.

Greetings to all my friends. Today I have brought the PDF of the Rapidex English Speaking Course book for all of you so that you can read it and start speaking good English. This book will help you a great deal in speaking good English, learning English quickly and understanding English. I have read the Rapidex English Speaking Course book myself, understood it and appreciated it. Many of my colleagues also bought the book, and they too called it the best book for teaching English.

If you want to learn English, speak English and pronounce English correctly, the Rapidex English Speaking Course book that I am presenting will certainly prove very effective. I am offering this book to all of you today, and I hope you will enjoy reading it and will thank the writer for designing such a good book for you. A good book takes a great deal of hard work from its author. That is why I also request that you download this book from my website and read it, and, if you like it, purchase a copy. It is available today on websites such as Amazon and Flipkart, and you can also have it delivered to your home.

Everyone should have this book. I have given it to you so that you can read it, understand it and look through it. Still, as we all know, although we do read things online, an offline copy of a book is also necessary to keep it for a long time. So let us all pledge that from today onwards we will read the Rapidex English Speaking Course every day, memorize it and try to speak English. To speak good English, you must understand one thing: read whatever is given in this book, understand it, and then memorize it. More importantly, use it in your everyday speech. To speak good English, it is essential that you use everything you are learning in your daily conversation.

The latest Rapidex English Speaking Course PDF, updated in the September 2021 publication, is available to download. This is the most updated and revised version of Rapidex.

The Rapidex English Speaking Course book has been uploaded to Google Drive, an online cloud storage service provided by Google where we can keep and share our files. The PDF of the book has been uploaded there and the Google Drive link has been shared so that you can download the book, save it and read it again in the future. A copy of the Rapidex English Speaking Course book has also been made available to everyone on this website.

If you want, you can also read the Rapidex English Speaking Course book on our website, where you will find the complete book. You can read it or download it, whichever you prefer. The CD and video lectures that accompany the Rapidex English Speaking Course book have also been provided; you can watch the video lectures and download the videos as well.

The latest Rapidex English Speaking Course, updated in the January 2025 publication, is available to download. Click Here

You can also download the audio book of the Rapidex English Speaking Course, or listen to its audio. All these things are available on our website so that you can speak English and become a successful and good person.

[Latest *] PDF Click to Download Rapidex English Speaking Course PDF

Q. What should you do to speak English?

A. Follow the Rapidex Spoken English Book video lecture provided to you by Diznr International. This video lecture shows you how to speak English quickly. It is a 60-day video lecture course.

Q. How can you learn spoken English quickly?

A. Watch one video each day and learn the concept taught in the Rapidex Spoken English CD Video Course.

Q. In how many days will you be able to speak English?

A. With the 60-day Spoken English Course on Diznr International, you will be able to speak English effectively.

Learn Rapidex Spoken English Course

Rapidex English speaking course book pdf

Greetings to all my friends. Today I have brought you the PDF of the Rapidex English Speaking Course book so that you can read it and start speaking good English. This book will help you a great deal in speaking good English, learning English quickly and understanding English well. I have read the Rapidex English Speaking Course book myself, understood it and appreciated it. Several of my colleagues also bought the book, and they too called it the best book for teaching English. If you want to learn English, speak English and pronounce English correctly, the Rapidex English Speaking Course book that I am presenting will certainly prove very effective. I am offering this book to all of you today; I hope you will enjoy reading it and will thank its writer for designing such a good book for you. A good book certainly takes a great deal of hard work from its author. That is why I also request that you download this book from my website and read it, and, if you like it, buy a copy to keep. The book is available today on websites such as Amazon and Flipkart.

Download Latest Rapidex English Speaking Course PDF Updated For 2024

You can also order this book for home delivery. Everyone should own this book. I have given it to you so that you can read it, understand it and look through it. Still, as we all know, although we do read things online, an offline copy of a book is also needed to keep it for a long time. So let us all pledge that from today we will read the Rapidex English Speaking Course every day, memorize it and try to speak English. To speak good English, you must understand one thing: read whatever is given in this book, understand it, and then memorize it. After that, and more importantly, use it in your everyday speech. To speak good English, it is essential that you use whatever you are learning in your daily conversation.

Dear readers! Today diznr.com has brought all you students the Rapidex English speaking course book pdf, a very important English book that will help you greatly in speaking and reading English. The Rapidex English Speaking Course book has been uploaded to Google Drive, an online cloud service provided by Google where we can store and share files. The PDF has been uploaded there and the Google Drive link has been shared so that you can download and save the book and read it again in the future. A copy of the PDF has also been made available on this website, so you can read the complete book on our website or download it, whichever you prefer. The CD and video lectures that accompany the Rapidex English Speaking Course book have also been provided; you can watch the video lectures and download the videos as well. You can also download the audio book of the Rapidex English Speaking Course, or listen to its audio. All of this is available on our website so that you can speak English and become a successful and good person. This Rapidex English speaking course book pdf was written in Hindi and English by R.K Gupta and will prove very useful to you for spoken English, so download this pdf for free, be sure to read it, and strengthen your English.

Rapidex English Speaking Course Pdf Download Link

Download Rapidex English speaking Course Complete PDF

Download Rapidex English Speaking Course Chapter Wise

The Rapidex English Speaking Course has been rewritten to make it even easier to read, and it looks better than before. In this new course the conversations have also been made easier. Along with simplifying the language, the book also explains how to speak to different people.

Book:- Rapidex English Speaking Course

Publisher:- Pustak Mahal Publication

Size:- 110 MB (115,843,072 bytes)

The book explains what words mean and how to say them. The words we use change with time, and words in current use have been added to the book concisely. Every effort has been made to present this book well; read it so that you can learn and speak good English. Everything you need to speak English easily is provided in this book. It also explains very well how to choose your words and express the feelings in your mind in English, which will help a lot in bringing out the feelings inside you.

The Rapidex English Speaking Course will help you greatly in learning and speaking English. If you want to speak and learn English, you need to read this book properly. It is one of the best books in the world.

The Rapidex English Speaking Course book teaches you not only correct spoken sentences but also how to place emphasis when speaking them. You will learn how to speak English and how to express your feelings in English. A dictionary is given at the end of the book so that you can memorize words used in daily life, which will help your speaking a lot. The course also describes methods by which you will be able to speak good English by choosing the right word at the right time. If you want to make English easier by using words of your own language in place of English words, you can gradually do so. This is why the English speaking course is also available in your mother tongue. If you want, you can read the Rapidex English Speaking Course book in your mother tongue; I have uploaded it in many different languages, and you can download the edition for your language.

- This book is for you if you want to learn and speak English with confidence.

- The Rapidex English Speaking Course is available in a new, updated edition if you want to download and read it.

The Rapidex English Speaking Course does not just teach you to learn and speak English; it also teaches English grammar chapter by chapter. The book explains very well what to say in different places in everyday speech, how to hold a conversation and how to talk to others. With this book you start learning English from the first day and learn it in 60 days, and you also pick up colloquial English very easily. A CD cassette comes with the Rapidex English Speaking Course book, which tries to teach you English through video. You can download this book free of cost from the link given below.

The Rapidex English Speaking Course PDF also teaches you correct English pronunciation. Many people want to learn to speak English but speak a different dialect, and correct spoken English in conversation differs from our native tongue, so keep this difference in mind as you practice. A CD cassette also comes with the Rapidex English Speaking Course book; its video has been uploaded to our website, where you can download the video and audio.

The Rapidex English Speaking Course book teaches you the correct way to speak sentences and also how to place emphasis when speaking them. In this book you will learn how to speak English and how to express your feelings in English. A dictionary is given at the end of the book so that you can memorize words in daily use, which will help your speaking a great deal. The course also describes methods by which you will be able to speak good English by choosing the right word at the right time, and you will become very good at speaking English. For example, if you want to make English easier by using words of your mother tongue in place of English words, you will gradually be able to do so. That is why the English speaking course is also available in your mother tongue. If you want, you can read the Rapidex English Speaking Course book in your mother tongue; I have uploaded it in many different languages, and you can download the English speaking course for whichever language is yours.

Binding:- Paperback

Publisher:- Pustak Mahal

Edition:- December 2025

Size:- 143 MB (150,373,649 bytes)

Rapidex English speaking Course Pdf

- Download -

As we all know, the Rapidex English Speaking Course book is also available in regional languages such as Hindi, Urdu, Punjabi, Nepali, Bengali, English, Bhojpuri and Marathi. That's why I have also tried to make these books available to you in PDF form. If you want to download the Rapidex English Speaking Course (Hindi edition) for free, you may download it from the diznr.com website. The latest version of this book is available on the diznr.com website.

RAPIDEX ENGLISH SPEAKING COURSE BOOK IN REGIONAL LANGUAGE

The latest English Spoken Books PDF is now available for kids and young students to learn and grasp English easily using the Rapidex English Speaking Course.

I have now also provided the current Rapidex English Speaking Course book in Hindi and in English.

The Rapidex English Speaking Course PDF has proved to be a useful book for businesspeople all over the world. One of the biggest reasons for the book's fame is that it is helping company founders, CEOs, managers, CTOs and other senior officials learn good English. Every businessperson wants their spoken English, written English and English conversation to be very strong. For this they need a good book, and the Rapidex English Speaking Course Book PDF is helping all businesspeople meet this need. The book is necessary for every student, every professional and every founder or CEO, and for interviews, whether they want to start a company or develop good software or a good website. Today every company, whether Google or Microsoft, needs people who know good English and wants to give jobs to such people. It is therefore necessary to learn good English, and the English speaking course is helping people learn it.

Rapidex Spoken English Tamil Pdf Free Download

The Rapidex Spoken English Tamil PDF free download is very helpful for all Tamil-speaking people who want to learn and speak English. The Rapidex English course PDF (Tamil to English) is one of the best books for learning English from Tamil, and the Rapidex English speaking course book PDF in Tamil is available to download for free from our website. The book contains all the concepts of spoken English, and any native Tamil speaker will be able to speak English efficiently with it. The Rapidex Hindi speaking course through Tamil PDF is also available for free download.

Rapidex Tamil Book Pdf

Rapidex English Speaking Course testimonials

You can see proof of the quality of the Rapidex English Speaking Course in the reviews published in famous newspapers all over the country.

- The Rapidex course is the only one that can teach anyone to speak and write English in 60 days without a teacher or going to school. By- Nagpur Times, Nagpur

- Written in a conversational style, this book can teach you to speak English easily. All the essential grammar of English is also understood by reading it. By- Navbharat Times, Delhi

- It presents the practice of English so well that the book can prove useful even in a convent school. By- Dinamani, Madras

- With an attractive cover and beautiful printing, this book will prove highly useful for all men and women because it can teach English in a short time. By- Deccan Chronicle, Secunderabad

- In fact, it is a very useful course, through which people who know Tamil can speak English like a graduate without any problem. By- Sunday Standard, Madras

- The specialty of this book is that it contains a useful list of selected daily-use words with meanings. The attempt to explain some basics of grammar separately at the end of each lesson is also undoubtedly praiseworthy. By- Jugantar, Kolkata

- For a long time I was looking for a book that gives a good knowledge of colloquial English as soon as it is read, and the Rapidex English Speaking Course met this criterion. By- Mumbai news, Mumbai

- Just as it is necessary to write a language in a pure and effective manner, the correct pronunciation and correct speaking of its words are also necessary, and all these qualities are present in the graded exercises of the book. By- Gujarat Mitra, Surat

- Such graded practice for learning English is its specialty. By- Gujarat News, Ahmedabad

Bestselling book ever

The Rapidex English Speaking Course is the one source you need for learning spoken English, and its books are available in 14 languages. This convent-level English-speaking book has spread to every corner of India and is liked by people of every language and every section of society. Whatever your mother tongue, you can learn fluent English through the 60-day course. With its 60 lessons, the book will teach you enough English in 60 days that you will be able to speak it without any problem. This book has reached the hands of 100 million people, so it is worth reading at least once.

Why is the book Rapidex English Speaking Course so popular?

- The book is written in a simple way.

- By following it, the fear of speaking English disappears completely from readers' minds, and they begin to speak English as if it were their mother tongue.

- Syntax, grammar and correct pronunciation: the Rapidex English Speaking Course teaches everything, and this one book contains all that a person needs to speak English.

Rapidex English Speaking Course Educational Cassette Script

Some important instructions:

This is not an ordinary cassette but the result of our long experience and objective research among millions of people, so be sure to read the instructions below to truly benefit from it.

- This cassette is a part of this book; put the idea of learning English just by listening to the cassette out of your mind.

- Use the cassette only after reading the book at least up to the Conversation section. The appendix is for your additional and special information.

- At the start of the cassette we have included the pronunciation of the alphabet (ABC) and of words. We know that by reading the book you must have learned a lot of English; by practicing with this, you will start correcting your pronunciation of English words.

- Besides following the instructions given in the middle of the cassette, you are completely free to stop and rewind the cassette wherever you like and practice with it at your convenience. Instructions that are not on the cassette are given in boxes in the script.

- While practicing the conversations you may miss some words or sentences and feel that the sentences are being spoken very quickly. In reality this is not so: such a pace is natural in conversation, and we want your conversation to have a similar pace. So, regardless of the words or sentences you miss, keep practicing the next sentences by catching the words and speaking along with them. With practice, this pace will become natural to you.

- The fear of what people will say is a hindrance in speech for most people. To overcome it, first read the sentences and then practice them by speaking along with the cassette. If you do this practice in front of a mirror, you will see the confidence on your own face. You will be able to put your point across without hesitation, become a good English speaker and a good leader, and go on stage somewhere and give a speech in English easily. To be able to speak English, you need to practice in front of a mirror.

- And finally, keep in mind that you will not learn to speak English in two to four days; you will have to practice again and again with all your heart.

The Tape Script

Friends, the cassette that comes with the Rapidex English Speaking Course and its book is a special gift for you. This cassette will teach you the correct pronunciation of English words and sentences, and it will also give you an atmosphere for speaking English. Thus, by reading as well as listening and repeating along with the cassette, you will prepare yourself completely for speaking English. In it you will first learn the pronunciation of the English alphabet, the days of the week, the names of the 12 months and counting from 1 to 100, and then some sentences that we often use in everyday life. After these, the cassette gives conversations for different occasions and situations, so that you will have no problem understanding what others are saying in a particular situation. So let us start the practice.

Also Read

- Rapidex English Speaking Course Book Online

- Rapidex English Speaking Course Video in Hindi

- Rapidex English Speaking Course Audio in Hindi

- Rapidex English Speaking Course in Hindi PDF Free Download

You can download the Rapidex English Speaking Course Book in Hindi for free from several reputable sources. This book is a comprehensive guide for Hindi speakers aiming to learn English effectively.

Rapidex English Speaking Course – Hindi Edition (PDF)

This edition covers essential topics such as:

- Basic grammar rules

- Common phrases and vocabulary

- Conversational English

- Pronunciation guides

- Letter writing and more

You can download the PDF from the Internet Archive:

Download PDF

Rapidex English Speaking Course App (Offline Access)

For mobile users, there's an Android app available that allows offline access to the course:

Download from Google Play Store

Alternative Download Option

Another source for downloading the PDF version of the book is Grammareer:

Download PDF
